
What Can AI Do? IBM – Agentic AI Workshop

Japhia Loo

July 28, 2025

AI has taken the world by storm, and businesses are racing to find meaningful ways to harness its power. With new tools and capabilities emerging almost daily, keeping up with the pace of innovation is both a challenge and a necessity.

Earlier this year, IBM held an Agentic AI Roundtable in Bracknell, presenting concepts, demos, and use cases that show how Agentic AI is poised to reshape the way we build intelligent applications.

The session brought together participants from Bell Integration and Naviam for an in-depth workshop on Agentic AI—a next-generation approach to building intelligent systems.

Today’s common LLM-based applications are designed with a linear flow: users input queries, and the system moves through a fixed sequence of steps before returning an output. These workflows are predictable and manageable, but they often require human oversight if something goes wrong or if additional information is needed.

Agentic AI systems, by contrast, are dynamic and adaptive. Instead of following a hard-coded path, they evaluate the current context, determine what additional information (if any) is needed, choose which tools to invoke, and revise their plan if needed. They don’t just follow instructions; they reason about what to do next.

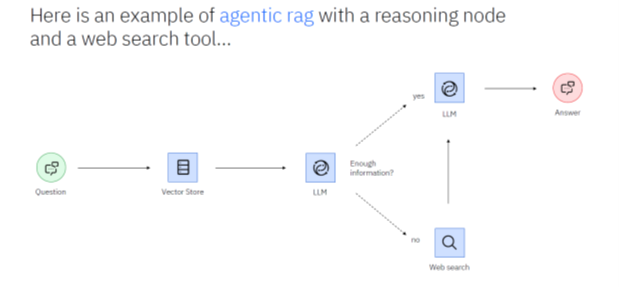

A good example of this evolution is Retrieval-Augmented Generation (RAG). A RAG system combines a retrieval component with a language model, allowing the model to access external knowledge sources in real time instead of relying solely on its internal training data. Traditional RAG involves querying a vector store, retrieving the top-k results, and passing that information to the LLM for answer generation.
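To make the traditional flow concrete, here is a minimal sketch of top-k retrieval followed by prompt assembly. The store contents, the three-dimensional vectors, and the function names are all made up for illustration; a real system would use an embedding model and a proper vector database, and would send the prompt to an LLM.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def retrieve_top_k(query_vec, store, k=2):
    """Return the texts of the k entries most similar to the query."""
    ranked = sorted(store, key=lambda item: cosine(query_vec, item["vec"]), reverse=True)
    return [item["text"] for item in ranked[:k]]

def build_prompt(question, passages):
    """Assemble the prompt that would be passed to the LLM."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Toy store: hand-written vectors stand in for real embeddings (assumption).
STORE = [
    {"text": "Savings accounts earn interest monthly.", "vec": [1.0, 0.0, 0.0]},
    {"text": "Card payments settle in two days.", "vec": [0.0, 1.0, 0.0]},
    {"text": "Interest rates are reviewed quarterly.", "vec": [0.9, 0.1, 0.0]},
]

passages = retrieve_top_k([1.0, 0.0, 0.0], STORE, k=2)
prompt = build_prompt("How often is interest paid?", passages)
```

The path is fixed: retrieve, assemble, generate. Nothing in it checks whether the retrieved passages actually answer the question.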

In an Agentic RAG system, the process starts similarly but then has a small twist. The agent evaluates whether the retrieved documents are sufficient. If not, it might initiate a web search or query another database. It may even call another agent to assist. The key difference: the agent makes the decisions autonomously, optimizing the workflow in real time.
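The decision step can be sketched roughly as follows. This is an illustration under simplifying assumptions, not IBM's implementation: the sufficiency check is a plain passage count standing in for a judgment the agent's LLM would make, and the tool names are hypothetical.

```python
def is_sufficient(passages, min_passages=2):
    """Stand-in for the agent's judgment; a real agent would ask an LLM."""
    return len(passages) >= min_passages

def agentic_retrieve(question, primary, fallbacks):
    """Query the primary store, then try fallback tools until the result is sufficient.

    primary:   callable taking the question and returning a list of passages
    fallbacks: ordered list of (name, callable) pairs, e.g. web search or another database
    Returns the collected passages and a trace of which tools were used.
    """
    passages = list(primary(question))
    trace = ["vector_store"]
    for name, tool in fallbacks:
        if is_sufficient(passages):
            break  # enough context gathered, stop calling tools
        passages.extend(tool(question))
        trace.append(name)
    return passages, trace

# Stub tools (assumptions) so the sketch runs without external services.
def vector_store(question):
    return ["doc A"]

def web_search(question):
    return ["web B"]

def other_database(question):
    return ["db C"]

passages, trace = agentic_retrieve(
    "example question",
    vector_store,
    [("web_search", web_search), ("other_database", other_database)],
)
```

Here the vector store returns only one passage, so the agent calls the web search and then stops, never touching the second database.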

Lendyrx is a financial application built by IBM using an agentic approach. This system allows users to interact conversationally with their banking data. Under the hood, multiple agents work together to fulfil requests:

This system is built on LangGraph, an agentic framework where each step (planner -> executor -> tools) retains and uses historical context. Each agent has a small prompt-based descriptor that helps the Planner Agent decide which one to use based on user input.
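The descriptor idea can be illustrated in plain Python without the LangGraph API. Everything below is hypothetical: the agent names and descriptors are invented (the actual Lendyrx agents are not listed here), and keyword overlap stands in for the LLM call a real Planner Agent would make.

```python
# Hypothetical agents and descriptors, for illustration only.
AGENT_DESCRIPTORS = {
    "balance_agent": "report the current account balance",
    "transactions_agent": "list recent transactions and payments",
}

def tokenize(text):
    """Lowercase words with surrounding punctuation stripped."""
    return {word.strip("?.,!") for word in text.lower().split()}

def plan(user_input, descriptors):
    """Pick the agent whose descriptor shares the most words with the input."""
    words = tokenize(user_input)

    def overlap(name):
        return len(words & tokenize(descriptors[name]))

    best = max(descriptors, key=overlap)
    return best if overlap(best) > 0 else None

def run_turn(state, user_input, descriptors):
    """Route one request and record it so later steps can use the history."""
    agent = plan(user_input, descriptors)
    state["history"].append({"input": user_input, "agent": agent})
    return agent

state = {"history": []}
first = run_turn(state, "What is my account balance?", AGENT_DESCRIPTORS)
second = run_turn(state, "Show my recent transactions", AGENT_DESCRIPTORS)
```

Keeping the descriptors short and distinct matters in this sketch for the same reason it does with an LLM planner: overlapping descriptions make routing ambiguous.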

Why not just use a single LLM? Because decomposing tasks across multiple agents allows for better specialization, error detection, and autonomy. For example, in an IBM demo, there was a code generator agent and a separate code executor agent that ensures the code works. Without this setup, a human would have to validate every result.
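A generator and executor pair might look like the sketch below. It is an assumption-laden toy, not the IBM demo: the generator is a stub that returns a buggy function first and a corrected one after receiving feedback, and `exec` is used for brevity even though it is not a sandbox and should not run untrusted code.

```python
def execute_and_check(source, func_name, cases):
    """Executor agent: run the generated code and check it against test cases.

    WARNING: exec() offers no isolation; a real executor would use a sandbox.
    """
    namespace = {}
    try:
        exec(source, namespace)
        func = namespace[func_name]
        for args, expected in cases:
            if func(*args) != expected:
                return False, f"{func_name}{args} != {expected!r}"
    except Exception as exc:
        return False, f"{type(exc).__name__}: {exc}"
    return True, "ok"

def generate_with_validation(generator, func_name, cases, max_attempts=3):
    """Loop generator -> executor, feeding failures back, until the code passes."""
    feedback = None
    for attempt in range(1, max_attempts + 1):
        source = generator(feedback)
        ok, feedback = execute_and_check(source, func_name, cases)
        if ok:
            return source, attempt
    raise RuntimeError(f"no working code after {max_attempts} attempts: {feedback}")

def stub_generator(feedback):
    """Stand-in for an LLM code generator (assumption)."""
    if feedback is None:
        return "def add(a, b):\n    return a - b\n"  # deliberately wrong first draft
    return "def add(a, b):\n    return a + b\n"

source, attempts = generate_with_validation(stub_generator, "add", [((1, 2), 3)])
```

The executor catches the wrong first draft and the loop recovers on the second attempt, which is the check a human would otherwise have to perform by hand.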

That said, the multi-agent approach has limitations. If too many agents are used, performance can degrade, not just in system speed but also in reliability and output quality. Each agent typically operates in its own loop of planning, executing, and reflecting, and when multiple agents are orchestrated together, they can create coordination overhead, resource contention, or even conflicting decisions if not managed carefully. This can slow down workflows, introduce redundant steps, or cause agents to “step on each other’s toes” when accessing shared tools or data. It’s important to design workflows thoughtfully so that each agent has a clear role and scope. Balancing complexity against functionality is key to building agentic systems that are both powerful and performant.

One of the most compelling promises of Agentic AI is automation. IBM estimates that AI could automate up to 70% of business tasks. The workshop highlighted two strong use cases:

The result is faster, more consistent handling of claims with reduced human overhead.

Agentic systems offer flexibility, but with that comes complexity. Ensuring the accuracy of outputs remains critical. Workshop speakers recommended setting boundaries for what LLMs can and can’t do. Using proven models and fine-tuning prompts are essential for consistency.

Validation remains one of the hardest challenges. A case from Bell Integration illustrated this vividly: a linear RAG setup performed well in demos but failed in production because it lacked robust, real-world accuracy checks. IBM proposed a fix: introduce a validation node, an additional step in the workflow that compares agent-generated answers to a trusted ground truth before accepting them. It's a simple but powerful way to reduce false confidence in LLM outputs.
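A validation node of this kind could be sketched as a single function that sits between the agent and the user. The ground-truth table, the status labels, and the exact-match comparison are all assumptions made for illustration; production checks would likely need fuzzier matching or a rubric.

```python
def normalize(text):
    """Lowercase and collapse whitespace so trivial differences do not count."""
    return " ".join(text.lower().split())

def validation_node(question, answer, ground_truth):
    """Compare an agent's answer with a trusted reference before accepting it.

    ground_truth: dict mapping known questions to their trusted answers
    Returns a dict with a status of "accepted", "rejected", or "unverified".
    """
    expected = ground_truth.get(question)
    if expected is None:
        # No reference exists, so the answer cannot be confirmed either way.
        return {"status": "unverified", "answer": answer}
    if normalize(answer) == normalize(expected):
        return {"status": "accepted", "answer": answer}
    return {"status": "rejected", "answer": answer, "expected": expected}

# Hypothetical reference data.
GROUND_TRUTH = {"What is the support line opening time?": "9 am"}

result = validation_node("What is the support line opening time?", "9  AM", GROUND_TRUTH)
```

Returning "unverified" rather than silently accepting answers with no reference keeps the gap in coverage visible, which is the point of the node.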

There’s also promising research into using reinforcement learning (RL) to help agents become more resilient and self-correcting. In this context, RL allows agents to receive feedback on their actions—rewarding good outcomes and penalizing poor ones—so they can refine their strategies over time. It opens the door to agents that can learn from experience rather than relying solely on static instructions, making them more adaptive in dynamic business environments.
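The reward-and-penalty idea can be shown with a very small example. The sketch below is an epsilon-greedy bandit over tool choices, chosen for brevity; it is not the research discussed at the workshop, and the tool names and reward values are invented.

```python
import random

class ToolPolicy:
    """Epsilon-greedy policy that learns which tool tends to give good outcomes."""

    def __init__(self, tools, epsilon=0.1, seed=0):
        self.values = {tool: 0.0 for tool in tools}  # running average reward per tool
        self.counts = {tool: 0 for tool in tools}    # number of updates per tool
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def choose(self):
        """Explore a random tool with probability epsilon, otherwise pick the best."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.values))
        return max(self.values, key=self.values.get)

    def update(self, tool, reward):
        """Fold a new reward into the tool's running average."""
        self.counts[tool] += 1
        self.values[tool] += (reward - self.values[tool]) / self.counts[tool]

# epsilon=0.0 disables exploration so the example is deterministic.
policy = ToolPolicy(["vector_store", "web_search"], epsilon=0.0)
policy.update("vector_store", -1.0)  # penalize a poor outcome
policy.update("web_search", 1.0)     # reward a good outcome
best = policy.choose()
```

After one penalty and one reward, the policy prefers the tool that worked. A real agent would face delayed and noisier feedback, which is part of why this remains a research topic.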

Agentic AI represents a shift in how we think about AI applications: from static, rule-based pipelines to intelligent, adaptive systems capable of planning, acting, and learning. The workshop was an eye-opener—not just for showcasing technical capabilities, but for highlighting the strategic, design, and ethical considerations that come with adopting these systems.

The message was clear: success with agentic AI isn't just about building more sophisticated tools, but about designing systems that are robust, trustworthy, and aligned with real-world needs. As organizations move from experimentation to implementation, the challenge will lie in scaling thoughtfully — balancing autonomy with oversight, and innovation with stability.

Agentic systems are still evolving, and so are the frameworks we need to build, validate, and govern them. The path forward will involve not just technical development, but continued research, collaboration, and iteration.
